Content pruning: How deleting 130 million pages improved Trainline’s SEO performance

Your closet is bursting, yet you still feel like you have nothing to wear. Too many outdated clothes are hiding your new, stylish additions. It's time for a spring cleaning. Can you relate? Well, I can.

Many websites are experiencing something similar: often, larger websites accumulate numerous low-value pages. In turn, this makes it harder for Google and users to find the pages that actually provide valuable content. This is especially true today, with the web being flooded by AI-generated content.

Recently, I came across a talk by David Lewis, Trainline’s Senior Tech SEO Manager, detailing Trainline’s multi-year project to declutter their website. By deleting 130 million pages, they managed to significantly increase their average position on Google.

Faulty language setup caused an overflow of low-value pages

Trainline is a popular online platform that allows users to book train and bus tickets across Europe.

The platform is available in multiple languages. In the past, whenever someone searched for a specific connection on the website, the corresponding page would be created in 5 languages.

However, this setup was causing one problem: they had not anticipated the demand for local train and bus connections. For instance, if someone in Germany wanted to take a bus from Freiburg to Crailsheim (a small town in Germany), a page with the route would be created in German, French, English, Spanish, and Italian.

These pages didn’t provide any value. For example, no Spanish users were looking for that connection. As a result, Trainline’s website soon overflowed with numerous low-value pages.

Can too many pages cause issues?

Lewis suspected that this setup might lead to crawling issues.

After looking at the crawl data, it was clear that the bloat of low-value pages was causing big issues. Google was crawling valuable pages too infrequently, typically once every 1 to 3 weeks. 36% of all monthly crawls were conducted on pages that held no value for users – a massive waste of crawl budget.

Inadequate internal linking made the problem even worse. Only 54% of pages were covered by internal links, leaving 46% as orphaned pages.
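To make the coverage figure concrete, here is a minimal sketch of how internal link coverage and orphaned pages could be computed from a crawl export. The function name and the input shapes (a list of known URLs plus source/target link pairs) are assumptions for illustration, not Trainline's or Botify's actual tooling.

```python
def link_coverage(all_urls, internal_links):
    """Return (coverage_ratio, orphaned_urls).

    all_urls: every URL the site knows about (e.g. from a sitemap or database).
    internal_links: iterable of (source_url, target_url) pairs from a site crawl.
    Both inputs are hypothetical shapes chosen for this sketch.
    """
    known = set(all_urls)
    # A page counts as covered if at least one *other* known page links to it.
    linked = {
        target
        for source, target in internal_links
        if source in known and target in known and source != target
    }
    orphaned = known - linked
    coverage = len(linked) / len(known) if known else 0.0
    return coverage, orphaned


urls = ["/a", "/b", "/c", "/d"]
links = [("/a", "/b"), ("/b", "/a"), ("/c", "/c")]  # "/c" only links to itself
coverage, orphaned = link_coverage(urls, links)
print(f"coverage: {coverage:.0%}, orphaned: {sorted(orphaned)}")
```

Note that a homepage with no inbound internal links would also show up as orphaned here, so in practice you would likely treat entry points separately.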

Trainline's 2-step strategy to enhance SEO performance

After discovering the severity of the issue, Trainline's team set 3 clear goals:

Every page should be accessible through internal links.

90% of the pages crawled by Google should offer valuable content.

The average position of the optimized* URLs should be improved.

*Optimized URLs refer to URLs that were not previously included in the internal linking architecture but are now being integrated into it as part of the project.

To achieve these goals, Trainline proceeded in two steps.

Step 1: Optimization of the internal linking structure and decluttering of some pages

To achieve 100% internal link coverage, Trainline built a new internal linking architecture. The “Looking for more ideas?” component was created to implement this new structure.

The first two columns direct authority to the most critical train connections, improving Trainline’s ranking potential for the most relevant keywords. The last column displays random routes, serving to finalize internal linking and ensure that all pages are interconnected.
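The idea behind the three columns can be sketched roughly as follows. This is not Trainline's implementation: the talk describes the last column as random routes, while this sketch swaps in a simple rotation so that full coverage is easy to verify. The function name, the `per_column` parameter, and the route identifiers are all hypothetical.

```python
def build_link_columns(page_index, top_routes, all_routes, per_column=3):
    """Return three columns of route links for the page at `page_index`.

    Assumes `all_routes` is a non-empty list and that `page_index` is the
    position of the current page within it.
    """
    n = len(all_routes)
    # Columns 1 and 2: the most important routes, identical on every page,
    # so that authority is concentrated on them.
    col1 = top_routes[:per_column]
    col2 = top_routes[per_column:2 * per_column]
    # Column 3: page i links to the next `per_column` routes (wrapping around).
    # Route j therefore always receives a link from page j - 1.
    col3 = [all_routes[(page_index + k) % n] for k in range(1, per_column + 1)]
    return col1, col2, col3


all_routes = [f"route-{i}" for i in range(10)]
top_routes = all_routes[:6]

linked = set()
for i in range(len(all_routes)):
    for column in build_link_columns(i, top_routes, all_routes):
        linked.update(column)

print(linked == set(all_routes))
```

With purely random picks, some pages could end up without any inbound link by chance, which is why a rotation (or a coverage check after a random draw) is used in this sketch.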

At the same time, Trainline orphaned 1.5 million low-value pages, hoping that Google would stop crawling and indexing them.

After 6 months and an additional 5 million deleted pages, crawl performance had improved. But it wasn’t enough.

Step 2: Removal of another 125 million pages

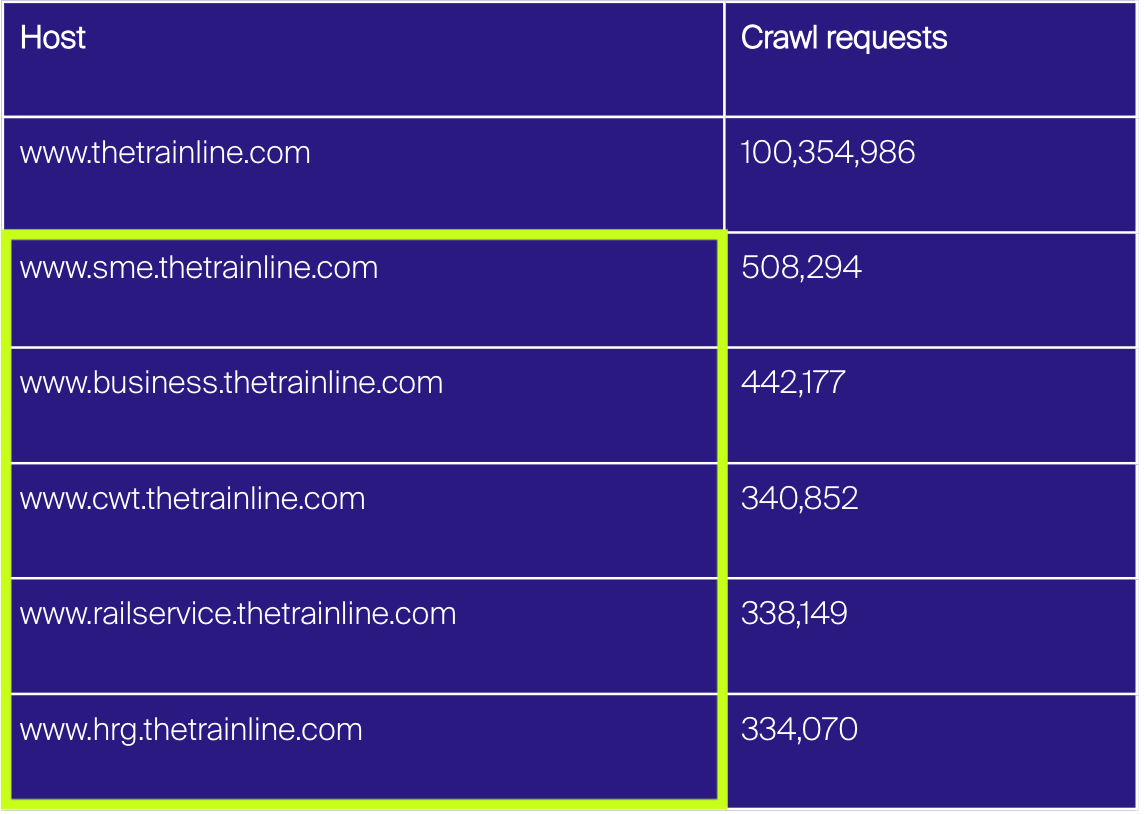

During the project, the Google crawl stats report was launched, and even more low-value pages were discovered. Trainline’s website had a duplicate version of every single UK train times page, across 6 subdomains.

These legacy pages were created before a migration but forgotten afterward. Once Trainline discovered the huge amount of crawl requests going to these subdomains, they decided to delete the affected 125 million pages straight away.

The outcome: the average position on Google improved significantly

After a total of 15 months, cleaning up the website, selecting useful content, and improving internal linking led to the following results:

The percentage of valuable pages crawled by Googlebot has increased significantly from 64% to 87%.

3x more URLs are now crawled by both Googlebot and Botify every month.

This means that the valuable pages are linked to within the website (crawled by Botify) and are actually found by Google (crawled by Google).

The average crawl depth has decreased from 7 to 5.

Most importantly: formerly orphaned URLs, which were internally linked for the first time, improved their average position by 2, moving from position 8 to 6.
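For readers unfamiliar with crawl depth: it is commonly measured as the minimum number of clicks needed to reach a page from a starting page such as the homepage. A minimal sketch using breadth-first search, assuming the internal links are available as source/target pairs (the function names and sample URLs are hypothetical):

```python
from collections import deque


def click_depths(links, start):
    """Return {url: minimum number of clicks from `start`} via breadth-first search."""
    graph = {}
    for source, target in links:
        graph.setdefault(source, []).append(target)

    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for nxt in graph.get(page, []):
            if nxt not in depths:  # first visit is the shortest path in BFS
                depths[nxt] = depths[page] + 1
                queue.append(nxt)
    return depths


def average_depth(depths):
    """Average depth over all pages reachable from the start page."""
    return sum(depths.values()) / len(depths)


links = [("/", "/a"), ("/a", "/b"), ("/", "/c")]
depths = click_depths(links, "/")
print(depths, average_depth(depths))
```

Pages that never appear in the result are unreachable from the start page, which is another way to spot orphaned URLs.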

This project was conducted a while ago. Looking back, however, it is evident that the boost in the average position of the pages helped generate more organic traffic in the long term. According to Ahrefs, organic traffic has been steadily rising since April 2020.

How would I replicate this?

If you’re in charge of a website that features thousands or even millions of URLs, I suggest going about pruning it the following way:

Identify potential

Use Google Search Console and tools like Botify, JetOctopus, or Screaming Frog to assess the scope of the problem. Discuss the findings internally with other stakeholders. Remember, SEO is just one channel; others need consideration too.

Prioritize ruthlessly

Taking everything above into consideration, you might want to cut any page that hasn't recorded any organic sessions in the last month. This is because any page that gets crawled but doesn't generate traffic uses up crawl budget and can negatively affect the performance of other pages.

Follow a strict logic

When making cuts, make sure you follow a clear logic. You don’t want to start deindexing pages randomly. There should be specific rules to keep order and to make it easy for anyone else working on the website to understand your decisions.
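One way to keep the logic explicit is to write the rules down as an ordered list in which the first matching rule decides. The sketch below is only an illustration: the `Page` fields, the rule names, and the actions are assumptions, and the thresholds would need to be agreed on with the other stakeholders.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    organic_sessions: int    # sessions in the chosen lookback window
    inbound_links: int       # internal links pointing at the page
    other_channel_sessions: int = 0  # e.g. paid or email traffic (hypothetical field)


# Ordered rules: the first matching rule wins. Each rule is (reason, predicate, action).
RULES = [
    ("used by other channels", lambda p: p.other_channel_sessions > 0, "keep"),
    ("has organic traffic", lambda p: p.organic_sessions > 0, "keep"),
    ("no traffic, orphaned", lambda p: p.inbound_links == 0, "remove"),
    ("no traffic, still linked", lambda p: True, "review"),  # catch-all
]


def decide(page):
    """Return (action, reason) for a page, based on the first matching rule."""
    for reason, predicate, action in RULES:
        if predicate(page):
            return action, reason
    return "review", "no rule matched"  # only reached if the catch-all is removed


for page in [Page("/a", 0, 0), Page("/b", 5, 0), Page("/c", 0, 3), Page("/d", 0, 0, 12)]:
    print(page.url, *decide(page))
```

Returning the reason alongside the action means that every decision can be traced back to a rule, which helps anyone else working on the website understand why a page was kept or cut.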

Monitor your performance

When making major changes to how Google crawls your website, it's important to closely monitor the impact and react quickly if things don't go as expected.